A Hands-On Guide by Sarah Rodriguez

Hey everyone! Sarah Rodriguez here. After 15 years working with mainframe systems—starting at the console and now bridging that world with modern DevOps—I’m excited to walk you through the skills that transformed my career. This isn’t theory from a textbook; this is what actually works when you’re building APIs on top of mainframe data.

Let me be real with you: when I first started looking at DB2 and API development, I was intimidated. But here’s what I learned—you don’t need to know everything at once. We’re going to build this knowledge step by step, just like I did.

Week 9-10: Getting Your Hands Dirty with DB2

Understanding DB2 Architecture (Without the Overwhelm)

Day 64-66: The DB2 Hierarchy – Your Mental Model

Alright, let’s start with how DB2 actually organizes things. When I was learning this, I kept a sticky note on my monitor with this hierarchy—it saved me constantly:

Think of DB2 like a filing system:

- Storage Groups = The filing cabinet (physical storage)

- Databases = Drawers in that cabinet (logical groupings)

- Table Spaces = Folders in the drawer (where tables live)

- Index Spaces = Your index cards (separate but pointing to the data)

- Tables = The actual documents (your data)

- Indexes = Quick-reference guides (for speed)

Here’s what tripped me up at first: DB2 subsystems. You’ll see names like DS01 (Development), TS01 (Test), PS01 (Production). Each one has the same table structures but different data. This is huge for testing without messing up production!

Let’s explore what you’ve got:

sql

-- First, connect to your subsystem

DB2

-- See what subsystem you're in

DISPLAY GROUP

-- Check out your databases (start with system ones)

SELECT * FROM SYSIBM.SYSDATABASE WHERE NAME LIKE 'DSN%';

-- Look at table spaces (DSNDB04 is a good starting point)

SELECT DBNAME, NAME, TYPE, STATUS

FROM SYSIBM.SYSTABLESPACE

WHERE DBNAME = 'DSNDB04';My advice: Run these queries and just look around. Get comfortable before you start building anything.

Day 67-70: Designing Schemas That Won’t Haunt You Later

Okay, here’s where I want to save you from my mistakes. When you’re building APIs that talk to DB2, schema design matters way more than you think.

The game-changer for me: Schema separation

Instead of throwing everything into one schema, organize by domain:

CUSTOMER_API– All customer-related stuffPRODUCT_API– Product dataTRANSACTION_API– Transaction records

Why? Because when you’re building APIs later, you can control access at the schema level. Security becomes way easier.

Let’s build something real:

sql

-- Create your API schemas

CREATE SCHEMA CUSTOMER_API AUTHORIZATION DB2ADMIN;

CREATE SCHEMA PRODUCT_API AUTHORIZATION DB2ADMIN;

-- Work in the customer schema

SET SCHEMA = CUSTOMER_API;

-- Here's a customer table designed for APIs

CREATE TABLE CUSTOMERS (

CUSTOMER_ID CHAR(10) NOT NULL PRIMARY KEY,

CUSTOMER_TYPE CHAR(1) NOT NULL CHECK (CUSTOMER_TYPE IN ('I','C')),

FIRST_NAME VARCHAR(50),

LAST_NAME VARCHAR(50) NOT NULL,

EMAIL_ADDRESS VARCHAR(100),

PHONE_NUMBER VARCHAR(20),

DATE_CREATED TIMESTAMP NOT NULL DEFAULT CURRENT TIMESTAMP,

DATE_MODIFIED TIMESTAMP NOT NULL DEFAULT CURRENT TIMESTAMP,

STATUS CHAR(1) NOT NULL DEFAULT 'A' CHECK (STATUS IN ('A','I','S')),

VERSION_NUMBER INTEGER NOT NULL DEFAULT 1

);See what I did there?

CUSTOMER_TYPE: ‘I’ for Individual, ‘C’ for Corporate (makes APIs cleaner)STATUS: ‘A’ Active, ‘I’ Inactive, ‘S’ Suspended (you’ll thank me later)VERSION_NUMBER: Critical for optimistic locking in APIs- Timestamps with defaults (no more null date bugs!)

Now add addresses (relationships matter!):

sql

CREATE TABLE CUSTOMER_ADDRESSES (

ADDRESS_ID INTEGER NOT NULL PRIMARY KEY,

CUSTOMER_ID CHAR(10) NOT NULL,

ADDRESS_TYPE CHAR(1) NOT NULL CHECK (ADDRESS_TYPE IN ('H','W','M')),

STREET_ADDRESS_1 VARCHAR(100),

STREET_ADDRESS_2 VARCHAR(100),

CITY VARCHAR(50),

STATE_PROVINCE VARCHAR(50),

POSTAL_CODE VARCHAR(20),

COUNTRY_CODE CHAR(2) DEFAULT 'US',

IS_PRIMARY CHAR(1) DEFAULT 'N' CHECK (IS_PRIMARY IN ('Y','N')),

DATE_CREATED TIMESTAMP NOT NULL DEFAULT CURRENT TIMESTAMP,

FOREIGN KEY (CUSTOMER_ID) REFERENCES CUSTOMERS(CUSTOMER_ID)

);

-- Don't forget indexes! APIs need speed.

CREATE UNIQUE INDEX IX_CUSTOMER_EMAIL ON CUSTOMERS(EMAIL_ADDRESS);

CREATE INDEX IX_CUSTOMER_TYPE_STATUS ON CUSTOMERS(CUSTOMER_TYPE, STATUS);

CREATE INDEX IX_ADDRESS_CUSTOMER ON CUSTOMER_ADDRESSES(CUSTOMER_ID);Real talk: That last index on CUSTOMER_ID in addresses? I forgot it on my first project. Queries were slow. Don’t be me.

Day 71-73: SQL That Powers APIs

This is where it gets fun. Modern DB2 can return JSON directly from SQL. When I discovered this, it changed everything.

Building API-ready queries:

sql

-- Complex customer data with JSON output

WITH CUSTOMER_SUMMARY AS (

SELECT

c.CUSTOMER_ID,

c.FIRST_NAME,

c.LAST_NAME,

c.EMAIL_ADDRESS,

c.STATUS,

COUNT(a.ADDRESS_ID) as ADDRESS_COUNT

FROM CUSTOMER_API.CUSTOMERS c

LEFT JOIN CUSTOMER_API.CUSTOMER_ADDRESSES a

ON c.CUSTOMER_ID = a.CUSTOMER_ID

GROUP BY c.CUSTOMER_ID, c.FIRST_NAME, c.LAST_NAME,

c.EMAIL_ADDRESS, c.STATUS

)

SELECT

JSON_OBJECT(

'customerId' VALUE CUSTOMER_ID,

'name' VALUE JSON_OBJECT(

'firstName' VALUE FIRST_NAME,

'lastName' VALUE LAST_NAME

),

'contactInfo' VALUE JSON_OBJECT(

'email' VALUE EMAIL_ADDRESS

),

'addressCount' VALUE ADDRESS_COUNT

) as CUSTOMER_JSON

FROM CUSTOMER_SUMMARY

WHERE STATUS = 'A'

FETCH FIRST 50 ROWS ONLY; -- Pagination!What’s happening here:

- CTE (

WITHclause) summarizes customer data JSON_OBJECTbuilds nested JSON structuresFETCH FIRST 50 ROWS= pagination for APIs

Pro tip: Start simple. Get one table working with JSON, then add complexity.

Stored Procedure for APIs (This Saved Me Hours):

sql

CREATE PROCEDURE CUSTOMER_API.GET_CUSTOMER_DETAILS(

IN P_CUSTOMER_ID CHAR(10),

OUT P_RESULT_JSON CLOB(32K)

)

LANGUAGE SQL

BEGIN

DECLARE v_count INTEGER DEFAULT 0;

-- Check existence first (avoid ugly errors)

SELECT COUNT(*) INTO v_count

FROM CUSTOMER_API.CUSTOMERS

WHERE CUSTOMER_ID = P_CUSTOMER_ID;

IF v_count = 0 THEN

-- Return error as JSON

SET P_RESULT_JSON = JSON_OBJECT(

'error' VALUE 'Customer not found',

'customerId' VALUE P_CUSTOMER_ID

);

ELSE

-- Build full customer JSON with addresses

SELECT JSON_OBJECT(

'customer' VALUE JSON_OBJECT(

'customerId' VALUE c.CUSTOMER_ID,

'name' VALUE JSON_OBJECT(

'firstName' VALUE c.FIRST_NAME,

'lastName' VALUE c.LAST_NAME

),

'addresses' VALUE JSON_ARRAY(

SELECT JSON_OBJECT(

'addressId' VALUE a.ADDRESS_ID,

'type' VALUE a.ADDRESS_TYPE,

'city' VALUE a.CITY,

'state' VALUE a.STATE_PROVINCE

)

FROM CUSTOMER_API.CUSTOMER_ADDRESSES a

WHERE a.CUSTOMER_ID = c.CUSTOMER_ID

)

)

) INTO P_RESULT_JSON

FROM CUSTOMER_API.CUSTOMERS c

WHERE c.CUSTOMER_ID = P_CUSTOMER_ID;

END IF;

END;Why I love this:

- Error handling is built in (no exceptions to catch)

- Returns JSON (perfect for APIs)

- One call, complete data (customer + addresses)

Day 74-77: Performance – Because Slow APIs = Angry Users

Let me share what I learned the hard way: Indexes are your best friend, but you need the right ones.

My performance monitoring setup:

sql

-- Track API performance in DB2

CREATE TABLE CUSTOMER_API.API_PERFORMANCE_LOG (

LOG_ID INTEGER GENERATED ALWAYS AS IDENTITY PRIMARY KEY,

API_ENDPOINT VARCHAR(100) NOT NULL,

CUSTOMER_ID CHAR(10),

START_TIME TIMESTAMP NOT NULL,

END_TIME TIMESTAMP,

RESPONSE_TIME_MS INTEGER,

STATUS_CODE INTEGER

);

-- Log performance (call this from your stored procs)

CREATE PROCEDURE CUSTOMER_API.LOG_API_PERFORMANCE(

IN P_ENDPOINT VARCHAR(100),

IN P_CUSTOMER_ID CHAR(10),

IN P_START_TIME TIMESTAMP,

IN P_END_TIME TIMESTAMP,

IN P_STATUS_CODE INTEGER

)

LANGUAGE SQL

BEGIN

DECLARE v_response_time INTEGER;

SET v_response_time =

INTEGER(MICROSECOND(P_END_TIME - P_START_TIME) / 1000);

INSERT INTO CUSTOMER_API.API_PERFORMANCE_LOG (

API_ENDPOINT, CUSTOMER_ID, START_TIME, END_TIME,

RESPONSE_TIME_MS, STATUS_CODE

) VALUES (

P_ENDPOINT, P_CUSTOMER_ID, P_START_TIME, P_END_TIME,

v_response_time, P_STATUS_CODE

);

END;Finding your slow spots:

sql

-- What's slow?

SELECT API_ENDPOINT,

AVG(RESPONSE_TIME_MS) as AVG_MS,

MAX(RESPONSE_TIME_MS) as MAX_MS,

COUNT(*) as CALLS

FROM CUSTOMER_API.API_PERFORMANCE_LOG

WHERE START_TIME >= CURRENT_TIMESTAMP - 1 DAY

GROUP BY API_ENDPOINT

ORDER BY AVG_MS DESC;My rule: If average response time > 500ms, something needs fixing (index, query, or both).

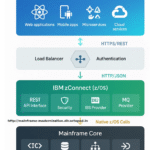

Week 11-12: z/OS Connect – The Bridge You Need

Making Mainframe Programs Talk REST

Day 78-80: Setting Up z/OS Connect

Okay, real talk: z/OS Connect intimidated me at first. But once I understood it’s just Liberty server with special sauce, everything clicked.

The architecture (simplified):

- Liberty Server = Your Java runtime

- Service Providers = Connectors to CICS, DB2, IMS

- API Layer = REST endpoints that call your COBOL

Basic server.xml (this is your foundation):

xml

<server description="z/OS Connect Server">

<featureManager>

<feature>zosConnect-2.0</feature>

<feature>ssl-1.0</feature>

<feature>appSecurity-2.0</feature>

</featureManager>

<!-- HTTP endpoints -->

<httpEndpoint id="defaultHttpEndpoint"

httpPort="8080"

httpsPort="8443"

host="*" />

<!-- SSL (don't skip this in production!) -->

<ssl id="defaultSSLConfig"

keyStoreRef="defaultKeyStore" />

<keyStore id="defaultKeyStore"

location="key.p12"

type="PKCS12"

password="{aes}your_encrypted_password" />

<!-- z/OS Connect settings -->

<zosconnect_zosConnectManager

requireAuth="true"

invokeTimeout="300000" />

<!-- Logging (trust me, you want good logs) -->

<logging traceSpecification="*=info:com.ibm.zosconnect.*=all"

maxFileSize="20"

maxFiles="10" />

</server>What I always configure:

invokeTimeout="300000"(5 minutes – some batch jobs are slow!)- Logging to

allfor z/OS Connect (debugging is easier) - SSL always (even in dev – practice secure habits)

Day 81-84: Connecting to Your Mainframe Resources

This is where we connect z/OS Connect to CICS, DB2, whatever you’ve got.

DB2 Connection (start here, it’s easiest):

xml

<!-- DB2 data source -->

<dataSource id="db2DataSource"

jndiName="jdbc/DB2DataSource">

<jdbcDriver libraryRef="DB2JCCLib" />

<properties.db2.jcc

databaseName="DSN1"

serverName="db2.company.com"

portNumber="446"

user="${db2.user}"

password="${db2.password}" />

<connectionManager

maxPoolSize="50"

minPoolSize="10"

connectionTimeout="30s" />

</dataSource>

<!-- z/OS Connect DB2 provider -->

<zosconnect_db2ServiceProvider id="db2Provider"

dataSourceRef="db2DataSource" />Connection pooling settings that work:

maxPoolSize="50"– Handles burst trafficminPoolSize="10"– Always readyconnectionTimeout="30s"– Fail fast if DB2 is down

CICS Connection (if you have CICS programs):

xml

<zosconnect_cicsServiceProvider id="cicsProvider"

enabler="CICSTransactionGateway"

gatewayUrl="https://cics.company.com:9080"

userName="${cics.user}"

password="${cics.password}"

maxConnections="10" />Day 85-87: Turning COBOL Programs into REST APIs

This is the magic moment. Let’s say you have a COBOL program CUSTINQ that looks up customers.

The COBOL (simplified):

cobol

IDENTIFICATION DIVISION.

PROGRAM-ID. CUSTINQ.

LINKAGE SECTION.

01 LS-INPUT-DATA.

05 LS-CUSTOMER-ID PIC X(10).

05 LS-REQUEST-TYPE PIC X(1).

01 LS-OUTPUT-DATA.

05 LS-RESPONSE-CODE PIC 9(3).

05 LS-RESPONSE-MESSAGE PIC X(100).

05 LS-CUSTOMER-DETAILS.

10 LS-OUT-FIRST-NAME PIC X(50).

10 LS-OUT-LAST-NAME PIC X(50).

10 LS-OUT-EMAIL PIC X(100).

PROCEDURE DIVISION USING LS-INPUT-DATA, LS-OUTPUT-DATA.

-- Your business logic here

GOBACK.Turn it into a REST service:

Create customer-inquiry-service.json:

json

{

"serviceName": "customerInquiry",

"serviceDescription": "Customer inquiry service",

"serviceProvider": "cicsProvider",

"program": "CUSTINQ",

"requestSchema": {

"type": "object",

"properties": {

"customerId": {

"type": "string",

"maxLength": 10

},

"requestType": {

"type": "string",

"enum": ["I", "U"]

}

},

"required": ["customerId", "requestType"]

},

"responseSchema": {

"type": "object",

"properties": {

"responseCode": {"type": "integer"},

"responseMessage": {"type": "string"},

"customerDetails": {

"type": "object",

"properties": {

"firstName": {"type": "string"},

"lastName": {"type": "string"},

"email": {"type": "string"}

}

}

}

}

}Deploy it:

bash

# Copy service definition to z/OS Connect

# Restart Liberty server

# Test it!

curl -X POST https://your-server:8443/zosConnect/services/customerInquiry \

-H "Content-Type: application/json" \

-d '{"customerId":"CUST000001","requestType":"I"}'Boom. Your COBOL program is now a REST API. First time I did this, I literally said “Holy Karamba!” out loud.

Wrapping Up: You’ve Got the Foundation

Alright, let’s take a breath here. If you’ve made it through DB2 schema design, SQL optimization, and z/OS Connect configuration, you’ve already accomplished something huge. Seriously.

When I was at this stage in my journey, I remember feeling both excited and overwhelmed. You’ve just learned how to:

✓ Design mainframe databases that work beautifully with modern APIs

✓ Write SQL that returns JSON (game-changer!)

✓ Configure z/OS Connect to bridge COBOL and REST

✓ Turn legacy programs into callable services

Here’s what I wish someone had told me at this point: You don’t need to be perfect at all of this. You need to be functional enough to start building real things.

My Advice Moving Forward

Start small. Pick one COBOL program or one DB2 table. Build one API endpoint. Get it working. Then build the next one. I see too many people trying to architect the perfect system from day one—that’s not how this works.

Document as you go. I keep a personal wiki (just markdown files) with every issue I’ve solved. “DB2 connection pool maxed out? Here’s what I did.” Future you will be grateful.

Find your community. Whether it’s a mainframe user group, a Slack channel, or just a couple of colleagues who are on this journey—talk to people. The mainframe community is smaller than web dev, which means people actually remember each other and help out. Use that.

What’s Next?

In the next part of this series, I’m going to show you:

- Zowe CLI – Command-line automation that’ll make you feel like a wizard

- API Mediation Layer – Enterprise-grade API management (this is where it gets serious)

- Service orchestration – Combining multiple mainframe services into powerful workflows

- Production-ready monitoring – Because if you can’t measure it, you can’t improve it

But for now? Practice what you’ve learned. Set up a test environment. Break things. Fix them. That’s how you really learn.

See you in the next article. And hey—if you run into issues, that’s normal. That’s not failure, that’s learning. Trust me, I’ve been there.

Keep pushing forward,

Sarah Rodriguez

Mainframe operator turned DevOps advocate | Making legacy systems sing in modern environments